Introduction

Bring your AI development from the data center to your desk with NVIDIA DGX™ Spark. NVIDIA DGX Spark puts the DGX operating system, the NVIDIA Grace Blackwell Superchip, and data-center-class performance in the hands of developers and engineers outside the data center.

Rather than rely on the cloud, borrow resources from shared computing infrastructure, or make do with a traditional workstation, developers can use the NVIDIA DGX Spark, which delivers 128GB of unified memory and up to 1 petaFLOPS of AI performance in a 6” x 6” box.

Deploy NVIDIA DGX Spark within your team for enterprise AI development in a small form factor with high performance density. Below, we review what DGX Spark can power in your AI development workflow.

Bring it Everywhere You Go

The NVIDIA DGX Spark is an AI development platform built around the NVIDIA GB10 Grace Blackwell Superchip, with up to 1 petaFLOPS of AI performance, 128GB of unified memory, and NVIDIA’s AI software stack preinstalled. Packed into a portable, DGX-styled mini PC, the DGX Spark is ideal for:

- Small-Scale AI Fine-Tuning & Deployment: Fine-tune and run local LLMs and generative AI models.

- AI Prototyping: Explore different AI architectures on a standalone device, then port your work directly back to your data center.

- Media & Creativity: Local AI video generation, Stable Diffusion, and AI-assisted production.

- Education & Research: Universities can use DGX Spark for hands-on AI curriculum and research.

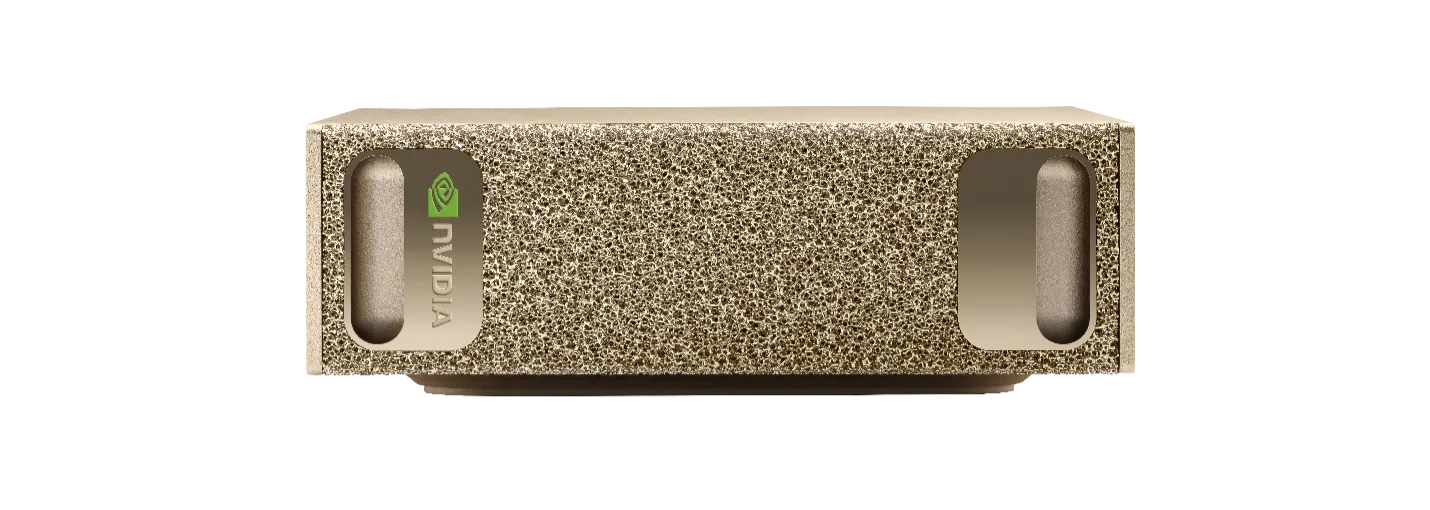

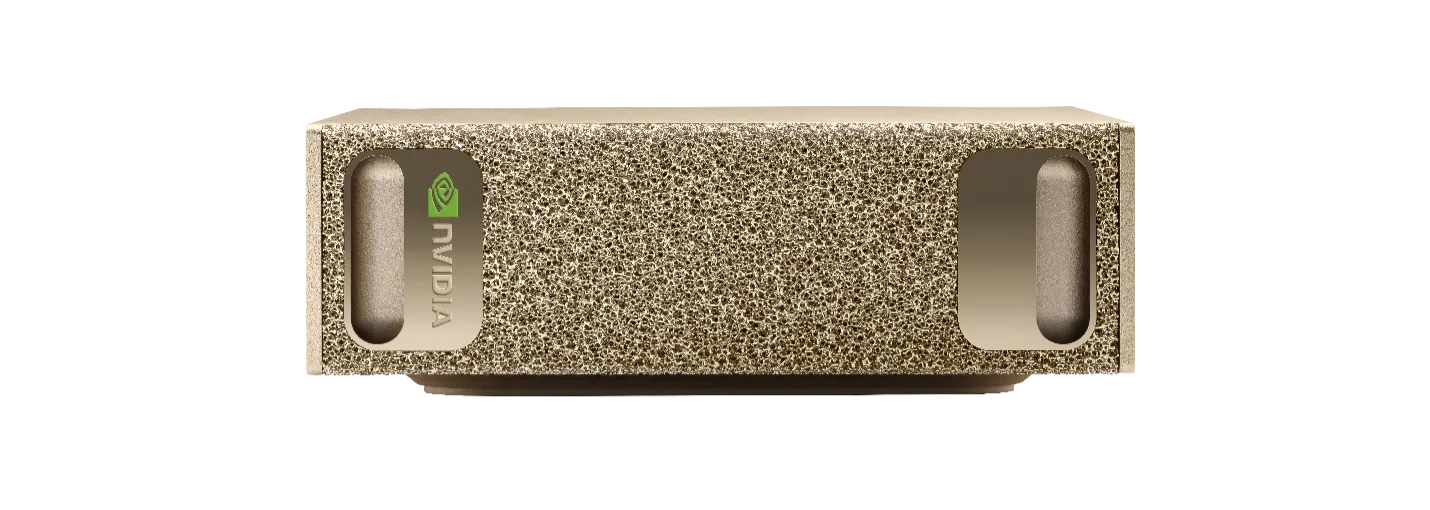

NVIDIA DGX Spark is a highly portable AI workstation. It fits in a backpack, so your team can bring compute to studios, data centers, and labs. This deployment flexibility, together with a path to scale up to enterprise computing infrastructure, defines the DGX Spark. It’s everything NVIDIA envisioned for the data center, packed into a small 6” x 6” x 2” gold chassis.

To honor the DGX legacy, the NVIDIA DGX Spark sports an all-gold chassis with an organic mesh on the front and rear, modeled after the original DGX-1, which shipped in 2016. Get a quote for a bulk NVIDIA DGX Spark order today.

- NVIDIA GB10 Grace Blackwell Superchip: Up to 1 petaFLOPS of AI performance at FP4 precision and 31 TFLOPS of FP32.

- 128GB of unified CPU-GPU memory: Unified memory removes the data transfer bottleneck between CPU and GPU, so developers can prototype, fine-tune, and run inference efficiently on a local machine.

- NVIDIA ConnectX networking and NVLink-C2C: ConnectX networking enables clustering, and NVLink-C2C provides 5x the bandwidth of PCIe. Developers can connect a second NVIDIA DGX Spark for more compute performance and memory to run even larger AI models.
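To make the memory figures above concrete, here is a rough back-of-the-envelope sketch in Python. It is not an NVIDIA sizing tool: the function names are hypothetical, and it assumes that only the model weights count (parameters times bits per parameter). KV cache, activations, quantization scale factors, and memory used by the operating system are all ignored, so real requirements will be higher.

```python
# Rough weight-memory estimate: parameters x bits per parameter.
# Assumption: weights only. KV cache, activations, quantization scales,
# and OS overhead are ignored, so treat results as a lower bound.

UNIFIED_MEMORY_GB = 128  # per DGX Spark, from the spec list above


def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


def fits_in_memory(params_billions: float, bits_per_param: float, units: int = 1) -> bool:
    """True if the weights alone fit in the combined unified memory of `units` systems."""
    return weight_memory_gb(params_billions, bits_per_param) <= UNIFIED_MEMORY_GB * units


if __name__ == "__main__":
    examples = [("8B at 16-bit", 8, 16), ("70B at 16-bit", 70, 16), ("120B at 4-bit", 120, 4)]
    for label, params, bits in examples:
        size = weight_memory_gb(params, bits)
        print(f"{label}: ~{size:.0f} GB of weights, fits on one unit: {fits_in_memory(params, bits)}")
```

Under these assumptions, 4-bit formats are what bring models in the 100B-parameter range within reach of a single 128GB system, while a second unit doubles the memory budget passed in through `units`.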

NVIDIA DGX Spark Specifications

NVIDIA DGX Spark AI Performance

Rather than depending on cloud computing and API calls, developers can run fine-tuning, image generation, data science, and inference workloads locally on DGX Spark. NVIDIA has published throughput figures for several AI workloads on the DGX Spark.

NVIDIA DGX Spark for Local LLM Inference

The DGX Spark delivers solid token generation speeds across a wide variety of models. The current top-of-the-line workstation GPU, the RTX PRO 6000 Blackwell, features 96GB of VRAM, whereas the DGX Spark offers 128GB of unified memory. This allows you to run larger models locally, even if it means slightly lower performance than the RTX PRO 6000 Blackwell. In our professional opinion, anything over 20 tokens per second is usable.

| Model | Precision | Backend | Prompt processing throughput (tokens/sec) | Token generation throughput (tokens/sec) |
| --- | --- | --- | --- | --- |
| Qwen3 14B | NVFP4 | TRT-LLM | 5928.95 | 22.71 |
| GPT-OSS-20B | MXFP4 | llama.cpp | 3670.42 | 82.74 |
| GPT-OSS-120B | MXFP4 | llama.cpp | 1725.47 | 55.37 |
| Llama 3.1 8B | NVFP4 | TRT-LLM | 10256.9 | 38.65 |
| Qwen2.5-VL-7B-Instruct | NVFP4 | TRT-LLM | 65831.77 | 41.71 |
| Qwen3 235B (on dual DGX Spark) | NVFP4 | TRT-LLM | 23477.03 | 11.73 |
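As a rough illustration of what these throughput numbers mean for a single request, the sketch below estimates response time as prompt tokens divided by prompt processing throughput, plus output tokens divided by token generation throughput. The helper names are hypothetical, and the model assumes throughput stays constant regardless of context length and concurrency, which will not hold exactly in practice.

```python
# Figures copied from the inference table above:
# model -> (prompt processing tokens/sec, token generation tokens/sec).
THROUGHPUT = {
    "Qwen3 14B": (5928.95, 22.71),
    "GPT-OSS-20B": (3670.42, 82.74),
    "GPT-OSS-120B": (1725.47, 55.37),
    "Llama 3.1 8B": (10256.9, 38.65),
    "Qwen3 235B (dual DGX Spark)": (23477.03, 11.73),
}

USABLE_TOKENS_PER_SEC = 20.0  # the article's rule of thumb for "usable"


def response_seconds(model: str, prompt_tokens: int, output_tokens: int) -> float:
    """Estimated time for one request: prefill time plus generation time.

    Assumption: both throughputs are constant for the whole request.
    """
    prefill_rate, decode_rate = THROUGHPUT[model]
    return prompt_tokens / prefill_rate + output_tokens / decode_rate


def is_usable(model: str) -> bool:
    """True if token generation exceeds the 20 tokens/sec rule of thumb."""
    return THROUGHPUT[model][1] > USABLE_TOKENS_PER_SEC


if __name__ == "__main__":
    for name in THROUGHPUT:
        seconds = response_seconds(name, prompt_tokens=1000, output_tokens=500)
        print(f"{name}: ~{seconds:.1f} s for 1000 prompt + 500 output tokens, usable: {is_usable(name)}")
```

For typical chat-style requests the generation term dominates, which is why the rule of thumb is stated in terms of token generation rather than prompt processing.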

NVIDIA DGX Spark for Fine-Tuning

With the portability of DGX Spark, developers can use powerful compute in a dense, on-the-go workstation for fine-tuning and experimentation before pushing to production. DGX Spark puts custom AI models in the hands of everyone, from enthusiasts to developers to businesses.

| Model | Method | Backend | Configuration | Peak tokens/sec |
| --- | --- | --- | --- | --- |
| Llama 3.2 3B | Full fine-tuning | PyTorch | Sequence length: 2048; Batch size: 8; Epoch: 1; Steps: 125; BF16 | 82,739.20 |
| Llama 3.1 8B | LoRA | PyTorch | Sequence length: 2048; Batch size: 4; Epoch: 1; Steps: 125; BF16 | 53,657.60 |
| Llama 3.3 70B | QLoRA | PyTorch | Sequence length: 2048; Batch size: 8; Epoch: 1; Steps: 125; FP4 | 5,079.04 |
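The configurations in the table can be turned into a rough run-time estimate. The sketch below is illustrative only and makes two assumptions that the source does not confirm: every sequence is filled to the full sequence length (so tokens per step equals sequence length times batch size), and the peak rate is sustained for the whole run. The result is therefore a lower bound on wall-clock time, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuneRun:
    """One row of the fine-tuning table above."""
    model: str
    seq_len: int
    batch_size: int
    steps: int
    peak_tokens_per_sec: float

    @property
    def total_tokens(self) -> int:
        # Assumption: every sequence is packed to the full sequence length.
        return self.seq_len * self.batch_size * self.steps

    @property
    def min_seconds(self) -> float:
        # Lower bound: assumes the peak rate is sustained for the entire run.
        return self.total_tokens / self.peak_tokens_per_sec


RUNS = [
    FineTuneRun("Llama 3.2 3B (full fine-tuning)", 2048, 8, 125, 82739.20),
    FineTuneRun("Llama 3.1 8B (LoRA)", 2048, 4, 125, 53657.60),
    FineTuneRun("Llama 3.3 70B (QLoRA)", 2048, 8, 125, 5079.04),
]

if __name__ == "__main__":
    for run in RUNS:
        print(f"{run.model}: {run.total_tokens:,} tokens, at least {run.min_seconds:.0f} s at peak rate")
```

The same arithmetic scales to a real dataset: replace `steps` with the number of optimizer steps your own run needs to get a first-order time budget.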

NVIDIA DGX Spark for Image Generation

With 128GB of unified memory and up to 1 petaFLOPS of compute, DGX Spark supports high-resolution image workflows and complex generative models. Developers can use FP4 precision for rapid iteration or a higher precision such as BF16 when output quality is critical.

| Model | Precision | Backend | Configuration | Images/min |
| --- | --- | --- | --- | --- |
| Flux.1 12B Schnell | FP4 | TensorRT | Resolution: 1024×1024; Denoising steps: 4; Batch size: 1 | 23 |
| SDXL 1.0 | BF16 | TensorRT | Resolution: 1024×1024; Denoising steps: 50; Batch size: 2 | 7 |

FAQ about NVIDIA DGX Spark

What makes the DGX Spark different from other AI development systems?

DGX Spark brings NVIDIA's enterprise-grade DGX architecture into a compact form factor that runs independently of your main computing resources. With up to 1 petaFLOPS of AI performance and 128GB of unified memory, AI developers and researchers essentially have a portable data center that fits in a backpack, enabling them to prototype, fine-tune, and deploy AI models anywhere.

Who is the DGX Spark designed for?

DGX Spark is built for AI developers, engineers, researchers, and enthusiasts who want local, high-performance compute for AI development and prototyping, fine-tuning, and inference. DGX Spark uses the same software stack as enterprise NVIDIA hardware, so you can port your models directly to the data center or cloud.

What operating system does DGX Spark run?

DGX Spark runs the DGX OS, the same environment used in NVIDIA's data center systems. It is also preloaded with NVIDIA's full AI software stack, including CUDA, cuDNN, TensorRT, and preconfigured Docker containers fully compatible with popular frameworks like PyTorch, TensorFlow, and JAX.

Can I cluster multiple DGX Spark units together?

Yes. With built-in ConnectX-7 networking (200 Gbps), you can connect multiple DGX Spark units for a larger memory pool, parallel processing, distributed model training, and more.
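To put the 200 Gbps figure in perspective, the following sketch converts a payload size into an idealized transfer time between two units. It assumes the quoted line rate is fully achievable. The `efficiency` parameter is a hypothetical knob for modeling protocol overhead, not a measured value, and the function name is illustrative.

```python
LINK_GBPS = 200  # ConnectX-7 line rate quoted in the answer above (gigabits per second)


def transfer_seconds(payload_gb: float, link_gbps: float = LINK_GBPS, efficiency: float = 1.0) -> float:
    """Idealized time to move `payload_gb` gigabytes across the link.

    Assumption: `efficiency` (0 to 1) is a hypothetical factor for protocol
    overhead; 1.0 means the full line rate is achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    gigabits = payload_gb * 8  # 8 bits per byte
    return gigabits / (link_gbps * efficiency)


if __name__ == "__main__":
    for payload in (1, 16, 60):
        print(f"{payload} GB: ~{transfer_seconds(payload):.2f} s at line rate")
```

At line rate the link moves 25 GB per second, so exchanging tensors between two units takes on the order of seconds for payloads in the tens of gigabytes.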

Where can I purchase the DGX Spark?

DGX Spark is available through Exxact Corp., featuring the NVIDIA DGX Spark Founders Edition model!

Conclusion

NVIDIA DGX Spark changes how AI professionals access enterprise-level computing power, delivering datacenter-class capabilities in a compact workstation. Empower your engineers and innovators to fine-tune foundation models, run personal LLM inference, and prototype new AI solutions without infrastructure constraints.

Combining cutting-edge Grace Blackwell architecture with seamless software integration and expandable connectivity options, the DGX Spark is purpose-built for those who want mobility, performance, and compatibility with the NVIDIA ecosystem.

Order NVIDIA DGX Spark through Exxact Corporation, an Elite NVIDIA Partner. Unlock unprecedented performance and flexibility for your next breakthrough project.

Accelerate AI Training with an NVIDIA DGX Spark

Take enterprise AI compute anywhere. NVIDIA DGX Spark delivers up to 1 petaFLOPS in a portable 6" x 6" x 2" form factor—datacenter power in your backpack. Available now through Exxact Corporation.

Get a Quote Today
